Artificial intelligence (AI) is proving to be one of the most influential and game-changing technological advances in the business world. As more and more enterprises go digital, companies all over the globe are constantly engineering new ways to implement AI-based functions in practically every platform and software tool at their disposal. As a natural consequence, however, cybercriminals too are on the rise, and view the increasing digitization of business as a wide-open window of opportunity. It should come as no surprise, then, that AI is affecting cybersecurity – but it’s affecting it in both positive and negative ways.

The Demand for Security Professionals

Cybercrime is a massively lucrative business, and one of the greatest threats to every company in the world. Cybersecurity Ventures’ Official 2019 Annual Cybercrime Report predicts cybercrime will cost the world $6 trillion annually by 2021 – up from $3 trillion in 2015. Cybercrime is causing unprecedented damage to both private and public enterprises, driving up information and cybersecurity budgets at small, medium and large businesses alike, as well as at governments, educational institutions, and organizations of all types globally. Indeed, Cybersecurity Ventures’ Cybersecurity Market Report also forecasts that global spending on cybersecurity products and services will exceed $1 trillion cumulatively from 2017 to 2021 – a 12% to 15% year-over-year market growth over the period.

As such, cybersecurity professionals are in high demand – cybercrime is expected to triple the number of job openings to 3.5 million unfilled cybersecurity positions by 2021, up from 1 million in 2014, with the sector’s unemployment rate remaining at 0%.

This drastic employee shortfall is creating an opportunity for AI solutions to help automate threat detection and response. With such strained resources, security professionals are some of the most hardworking employees around. AI can ease the burden, automate repetitive and tiresome tasks, and potentially help identify threats more effectively and efficiently than other software-driven approaches.

How AI Is Improving Cybersecurity

Cyber threat detection is one of the areas of cybersecurity where AI is proving the most useful and gaining the most traction. Machine learning-based technologies are particularly efficient at detecting unknown threats to a network. Machine learning is a branch of AI where computers use and adapt algorithms depending on the data received, learn from this data, and improve. In the realm of cybersecurity, this translates into a machine that can predict threats and identify anomalies with greater accuracy and speed than a human equivalent would be able to – even one using the most advanced non-AI software system.

This is a marked improvement over conventional security systems, which rely on rules, signatures and threat intelligence to detect threats and respond to them. These systems are essentially backward-looking: they are built around what is already known about previous attacks and known attackers. The problem is that cybercriminals are able to create new and innovative attacks that exploit the inherent blind spots in these systems. What’s more, the sheer volume of security alerts a company has to deal with on a daily basis is often too much for resource-stretched security teams to handle when relying on conventional security technology and human expertise alone.
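To make the blind spot concrete, here is a minimal sketch of signature matching, assuming a made-up signature database of SHA-256 digests. The payloads and the names `KNOWN_BAD_PAYLOADS`, `SIGNATURES` and `matches_signature` are hypothetical and exist only for illustration; real products use far richer signatures and rules.

```python
import hashlib

# Hypothetical signature database: digests of payloads seen in past attacks.
KNOWN_BAD_PAYLOADS = [b"malicious-payload-v1", b"malicious-payload-v2"]
SIGNATURES = {hashlib.sha256(p).hexdigest() for p in KNOWN_BAD_PAYLOADS}

def matches_signature(payload: bytes) -> bool:
    """Return True only if the payload's digest is already in the database."""
    return hashlib.sha256(payload).hexdigest() in SIGNATURES

known = matches_signature(b"malicious-payload-v1")    # seen in a past attack
variant = matches_signature(b"malicious-payload-v3")  # new, slightly altered variant
print(known, variant)  # True False
```

The altered payload slips through simply because nobody has recorded it yet, which is the "past-centric" weakness described above.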

Advancements in AI, however, have led to much smarter and more autonomous security systems. With machine learning applied, many of these systems can learn for themselves without labelled examples or human intervention (unsupervised learning), and keep pace with the amount of data that security systems produce. Machine learning algorithms are exceptionally good at identifying anomalies in patterns. Rather than looking for matches with specific signatures – a traditional tactic that modern-day attacks have all but rendered futile – the AI system first builds a baseline of what is normal, and then flags events that deviate from that baseline as possible attacks.
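The baseline idea can be sketched in a few lines. This is a deliberately simple statistical stand-in (a z-score test), not how any particular product works; the login counts, the threshold of 3 standard deviations, and the function names are all assumptions made for the example.

```python
from statistics import mean, stdev

def build_baseline(samples):
    """Summarise normal behaviour as (mean, standard deviation)."""
    return mean(samples), stdev(samples)

def is_anomalous(value, baseline, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical history: logins per hour observed during normal operation.
normal_logins_per_hour = [12, 15, 11, 14, 13, 16, 12, 14]
baseline = build_baseline(normal_logins_per_hour)

print(is_anomalous(15, baseline))  # False: within the usual range
print(is_anomalous(90, baseline))  # True: far outside the baseline
```

No signature of any attack is involved here: the spike is flagged only because it differs from what the system has learned to treat as normal.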

Another approach in machine learning is to use supervised algorithms, which detect threats based on the labelled data they have been trained on – crudely, “malware” vs “not malware”. Based on the labelled data, the system can make decisions about new data, and determine whether it is malware or not. Thousands of instances of malware code can be used as training data for supervised algorithms, creating an extremely efficient system for detecting incoming threats.
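As a rough illustration of learning from labelled data, the sketch below trains a nearest-centroid classifier, chosen here only because it is short. The two features (file entropy and a count of suspicious API calls), the sample values, and the function names are hypothetical.

```python
def train(samples):
    """samples: list of (features, label). Returns one centroid per label."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def predict(centroids, features):
    """Assign the label whose centroid is closest to the new sample."""
    return min(centroids, key=lambda label: squared_distance(centroids[label], features))

# Hypothetical labelled data: (entropy, suspicious API calls) -> label.
training_data = [
    ((7.8, 12), "malware"), ((7.5, 9), "malware"), ((7.9, 15), "malware"),
    ((4.2, 1), "not malware"), ((5.0, 0), "not malware"), ((4.6, 2), "not malware"),
]
model = train(training_data)

print(predict(model, (7.6, 11)))  # malware
print(predict(model, (4.8, 1)))   # not malware
```

A production system would use many more samples and features and a stronger model, but the workflow is the same: learn from labelled examples, then decide about data the system has not seen before.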

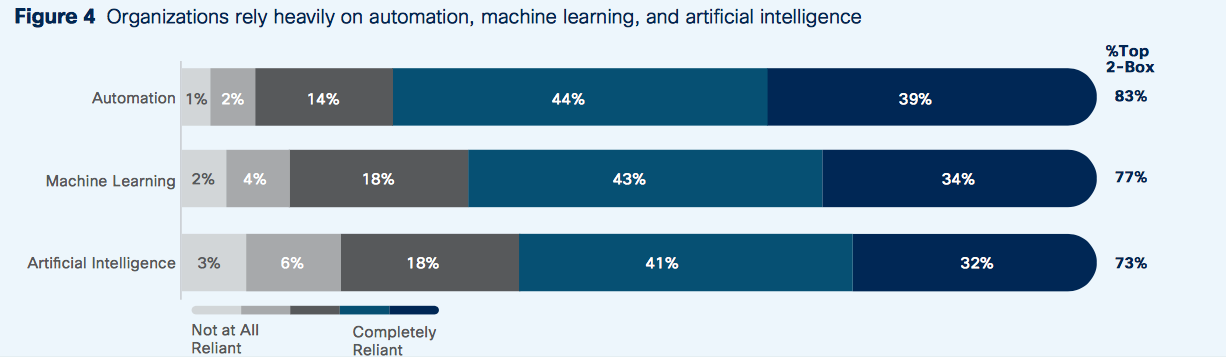

Today, more and more organizations are relying on automation, machine learning and artificial intelligence to automate threat detection – some entirely so. According to Cisco’s 2018 Security Capabilities Benchmark Study, 39% of organizations completely rely on automation to detect cyber threats, while 34% completely rely on machine learning, and 32% completely rely on AI.

(Image source: cisco.com)

The Malicious Use of Artificial Intelligence

This all seems very promising for security professionals working today. But AI isn’t only a force for good: it can help cybercriminals achieve their goals just as much as it can help security teams.

In a recently published report – The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation – a panel of 26 experts from the US and UK identifies many instances where AI can be weaponized and used to augment and upscale cyberattacks.

One of the biggest issues highlighted is that AI can be used to automate attacks on a truly massive scale. Attackers usually rely on workforces of their own to coordinate attacks. But if attackers utilize AI and recruit vast armies of machine learning-powered bots, things like IoT botnets will become a much larger threat. What’s more, the costs of attacks can be lowered by the scalable use of AI systems to complete tasks that would otherwise require human labor, intelligence and expertise. Just as AI may be a solution to the cybersecurity talent shortage, so too may it be a solution to talent shortages in the cybercriminal underworld.

In all, the report identifies three high-level implications of progress in AI in the threat landscape – the expansion of existing threats, the introduction of new threats, and the alteration of the typical character of threats.

For many familiar attacks, the authors expect progress in AI to expand the set of actors who are capable of carrying out an attack, the rate at which these actors can carry it out, and the set of plausible targets. “This claim follows from the efficiency, scalability, and ease of diffusion of AI systems,” they say. “In particular, the diffusion of efficient AI systems can increase the number of actors who can afford to carry out particular attacks. If the relevant AI systems are also scalable, then even actors who already possess the resources to carry out these attacks may gain the ability to carry them out at a much higher rate. Finally, as a result of these two developments, it may become worthwhile to attack targets that it otherwise would not make sense to attack from the standpoint of prioritization or cost-benefit analysis.”

Progress in AI will also enable a new variety of attacks, according to the report. These attacks may use AI systems to complete certain tasks more successfully than any human could, or take advantage of vulnerabilities that AI systems have. For example, voice is increasingly being used as an identification method. However, there have recently been developments in speech synthesis systems that learn to imitate individuals’ voices, and it’s feasible that these systems could be used to break into systems protected by voice authentication. Other examples include exploiting the vulnerabilities in AI systems used in things like self-driving cars, or even military weaponry.

The authors of the report also expect the typical character of threats to shift in a few distinct ways – namely that they will be especially effective, finely targeted, and even more difficult to attribute. For example, attackers frequently trade off the frequency and scale of their attacks on the one hand against their effectiveness on the other. Spear phishing, for instance, is more effective than regular phishing, which does not involve tailoring messages to individuals, but it is relatively expensive to carry out en masse. AI systems can be trained to tailor spear phishing email messages, making such attacks much more scalable while keeping them effective.

There is also the very real possibility of attackers exploiting the vulnerabilities of AI-based security defense systems. For example, in the case of supervised machine learning, an attacker could potentially gain access to the training data and switch labels so that some malware examples are tagged as clean code by the system, nullifying its defenses.
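A toy example shows why the integrity of training labels matters. It assumes a one-feature nearest-centroid classifier and invented "suspicion scores"; the data, the flipped samples and the function names are all hypothetical, and real detection models are far more complex.

```python
def train(samples):
    """Return the mean score (centroid) for each label."""
    grouped = {}
    for score, label in samples:
        grouped.setdefault(label, []).append(score)
    return {label: sum(v) / len(v) for label, v in grouped.items()}

def predict(centroids, score):
    """Assign the label whose centroid is closest to the score."""
    return min(centroids, key=lambda label: abs(centroids[label] - score))

# Hypothetical training set: (suspicion score, label).
clean_data = [(8, "malware"), (9, "malware"), (10, "malware"),
              (1, "clean"), (2, "clean"), (3, "clean")]

# Simulate tampering: two malware samples are relabelled as clean.
poisoned_data = [(s, "clean") if s in (8, 9) else (s, label)
                 for s, label in clean_data]

before = predict(train(clean_data), 6)
after = predict(train(poisoned_data), 6)
print(before, after)  # malware clean
```

The same sample is detected by the model trained on intact labels and missed by the one trained on tampered labels, which is why access to training data needs to be controlled and audited as carefully as the model itself.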

Final Thoughts

AI is certainly something of a double-edged sword when it comes to security. While solutions that utilize AI and machine learning can greatly reduce the amount of time needed for threat detection and incident response, the technology can also be used by cybercriminals to increase the efficiency, scalability and success rate of attacks, drastically altering the threat landscape for companies in the years ahead. There is indeed an arms race playing out as you read this. Undoubtedly AI will prove to be a boon to cybersecurity over the coming years – and it needs to be, because AI is also opening up whole new categories of attacks that organizations will have to be equipped to deal with very soon.
